Annotation Team Training

We recruited twelve annotators through a professional data-labeling service. All had prior experience with human body keypoint annotation. Before beginning work, each annotator completed a focused training session led by an internationally accredited para-athletics classifier. This preparation ensured that every team member understood the anatomical variation found in individuals with limb deficiencies and the requirements of our extended keypoint schema, so that labels would be accurate and consistent across the dataset.

Team composition and training:

- 12 annotators recruited from a professional data-labeling service, with prior experience in human body keypoint annotation

- Training sessions for all annotators, led by an internationally accredited para-athletics classifier

- Understanding different types of limb deficiencies

- Familiarization with our keypoint schema

- Practice sessions with sample videos

- Quality control measures

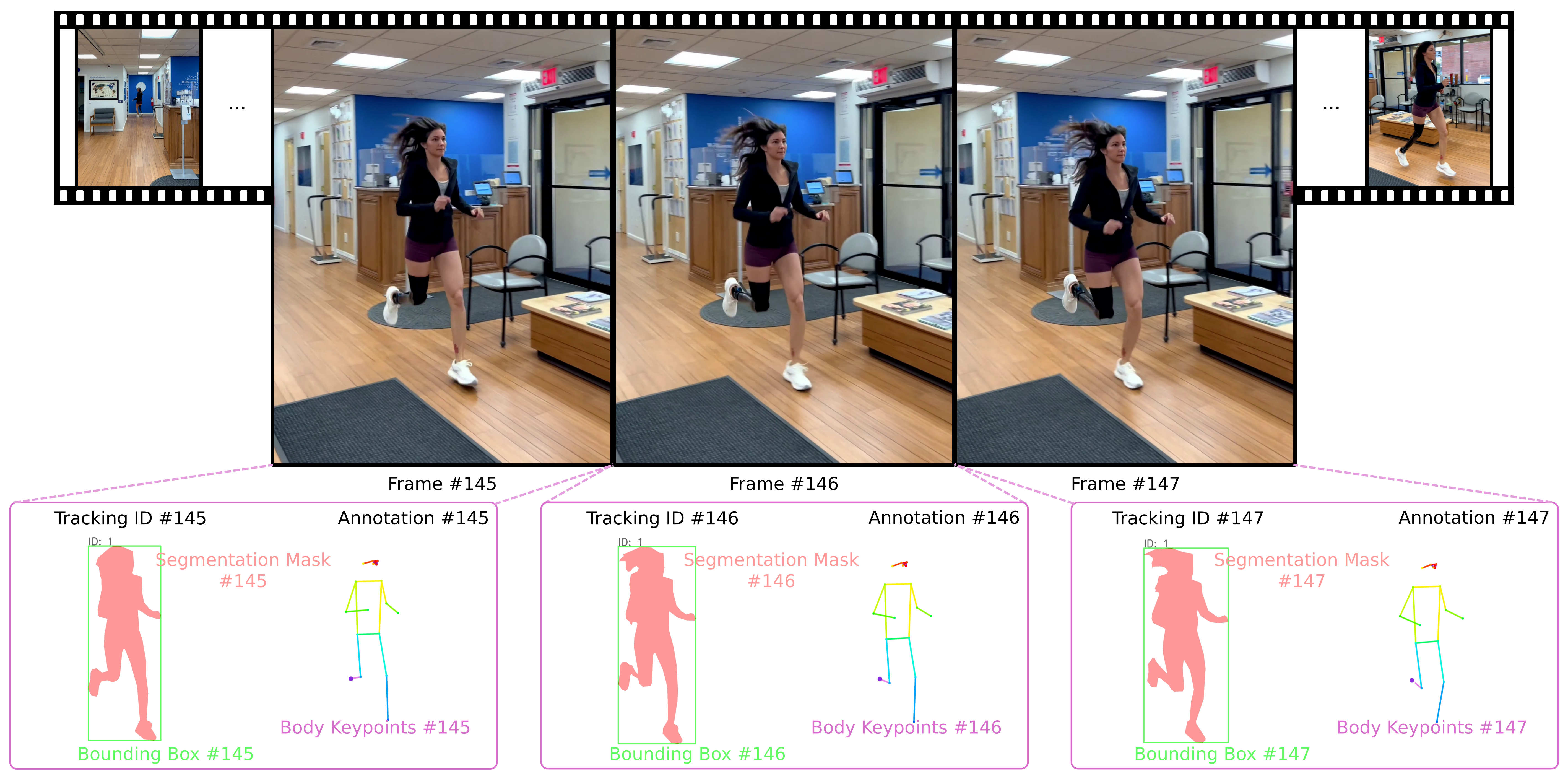

Figure 1: Our annotation workflow and quality control process

Bounding Box, Segmentation Mask and Tracking ID

We use the open-source platform X-AnyLabeling together with Segment Anything 2 (SAM2) promptable segmentation to generate initial masks. For each individual, annotators first merge all segmentation masks sharing the same tracking ID in a given frame and compute the tightest enclosing bounding box around that merged mask. They then refine a pixel-accurate segmentation mask and confirm the persistent tracking ID. SAM2’s ability to generalize zero-shot to unseen residual-limb shapes cuts mask-drawing time by over 50%. To maintain high quality, we enforce an 80% accuracy threshold: after one annotator finishes a batch, a second annotator cross-checks it, and any batch in which more than 20% of masks show clear errors is returned for revision.
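The merge-then-box step can be illustrated with a minimal sketch. It is not the X-AnyLabeling or SAM2 implementation: the function names `merge_masks` and `tight_bbox`, the nested-list mask representation, and the toy masks are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

Mask = List[List[int]]  # binary mask as rows of 0/1, one entry per pixel


def merge_masks(masks: List[Mask]) -> Mask:
    """Pixel-wise union of the masks that share one tracking ID in a frame."""
    if not masks:
        raise ValueError("need at least one mask")
    height, width = len(masks[0]), len(masks[0][0])
    merged = [[0] * width for _ in range(height)]
    for mask in masks:
        for y in range(height):
            for x in range(width):
                if mask[y][x]:
                    merged[y][x] = 1
    return merged


def tight_bbox(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Return (x_min, y_min, x_max, y_max), inclusive, or None for an empty mask."""
    xs = [x for row in mask for x, value in enumerate(row) if value]
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not xs:
        return None
    return min(xs), min(ys), max(xs), max(ys)


# Hypothetical example: two mask fragments belonging to the same tracking ID.
torso = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 0, 0, 0],
]
limb = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 1, 0],
    [0, 0, 0, 1, 0],
]
bbox = tight_bbox(merge_masks([torso, limb]))
print(bbox)  # (1, 1, 3, 3)
```

Computing the box from the merged mask, rather than from any single fragment, keeps disconnected regions of the same person (for example a limb separated from the torso by an occluder) inside one box.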

Each frame is annotated with:

- Bounding boxes around each individual

- Segmentation masks for precise body part localization

- Tracking IDs to maintain identity across frames

Keypoint Annotation Process

To capture each subject’s unique anatomy, two trained annotators and one accredited para-athletics classifier review the disability profiles of all 401 individuals and agree on a personalized 25-keypoint schema for each. Each schema is represented by a 25-element presence mask that indicates which of the seventeen COCO landmarks and eight residual-limb endpoints apply to that individual. For example, if an individual’s right ankle is absent but the right knee is present, the mask value is 0 for the right-ankle keypoint and 1 for the right-knee keypoint. Annotators then label every frame according to that schema, marking each keypoint’s image coordinates. We follow the COCO visibility convention: any landmark that is within the frame but occluded receives a visibility flag of 1. We require an 80% point-level agreement rate between annotators on a 5% sample of the data, where “agreement” means no visually apparent errors in landmark placement. If more than 20% of the sampled points in a batch show such errors, the original annotator must correct the entire batch. This structured, expert-guided workflow is designed to make the placement of residual-limb keypoints unambiguous and the resulting pose data reliable.
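As a rough illustration of the presence mask and visibility flags, the sketch below builds a 25-element mask and encodes single keypoints. The seventeen COCO names and the visibility values (0 = not labeled, 1 = labeled but occluded, 2 = labeled and visible) follow the public COCO convention. The residual-limb names `residual_limb_0` to `residual_limb_7`, the helper functions, and the choice to store absent keypoints as `(0, 0, 0)` are placeholders assumed here, not the dataset's actual format.

```python
from typing import List, Optional, Tuple

COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]
# Placeholder names: the eight residual-limb endpoints are not named in the text.
RESIDUAL_KEYPOINTS = [f"residual_limb_{i}" for i in range(8)]
KEYPOINT_NAMES = COCO_KEYPOINTS + RESIDUAL_KEYPOINTS  # 17 + 8 = 25


def make_presence_mask(absent: List[str]) -> List[int]:
    """Return a 25-element mask: 1 if the keypoint applies to the person, else 0."""
    unknown = set(absent) - set(KEYPOINT_NAMES)
    if unknown:
        raise ValueError(f"unknown keypoint names: {sorted(unknown)}")
    return [0 if name in absent else 1 for name in KEYPOINT_NAMES]


def encode_keypoint(
    present: int, xy: Optional[Tuple[float, float]], occluded: bool
) -> Tuple[float, float, int]:
    """Encode one keypoint as (x, y, v) with COCO-style visibility v."""
    if not present or xy is None:
        return (0.0, 0.0, 0)  # assumption: absent or unlabeled points are zeroed
    return (xy[0], xy[1], 1 if occluded else 2)


# Hypothetical individual: right ankle absent, one residual-limb endpoint in use.
mask = make_presence_mask(["right_ankle"] + RESIDUAL_KEYPOINTS[1:])
knee = encode_keypoint(mask[KEYPOINT_NAMES.index("right_knee")], (212.0, 340.5), occluded=True)
ankle = encode_keypoint(mask[KEYPOINT_NAMES.index("right_ankle")], None, occluded=False)
```

Keeping the mask per individual rather than per frame means the schema is decided once by the reviewers, and frame-level annotation only has to supply coordinates and visibility.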

Our keypoint annotation process includes:

- Frame-by-frame annotation of all visible keypoints

- Special handling for residual limbs and prosthetics

- Occlusion labeling for partially visible keypoints

- Inter-annotator agreement checks
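The sampled agreement check described above could be sketched as follows. Only the 5% sampling rate and the 20% error limit come from the text; the function names `sample_indices` and `batch_passes`, the fixed random seed, and the representation of reviewer judgments as booleans are assumptions for illustration.

```python
import math
import random
from typing import List, Sequence


def sample_indices(n_points: int, fraction: float = 0.05, seed: int = 0) -> List[int]:
    """Pick a random subset of point indices for a second annotator to review."""
    if n_points <= 0:
        raise ValueError("n_points must be positive")
    k = min(n_points, max(1, math.ceil(n_points * fraction)))
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    return sorted(rng.sample(range(n_points), k))


def batch_passes(error_flags: Sequence[bool], max_error_rate: float = 0.20) -> bool:
    """Return True unless more than max_error_rate of reviewed points show errors."""
    if not error_flags:
        raise ValueError("no reviewed points")
    error_rate = sum(error_flags) / len(error_flags)
    return error_rate <= max_error_rate


# Hypothetical batch of 1000 labeled points: 50 are sampled for review.
reviewed = sample_indices(1000)
# Suppose the reviewer flags 11 of the 50 sampled points as clearly misplaced.
flags = [True] * 11 + [False] * (len(reviewed) - 11)
needs_revision = not batch_passes(flags)
```

In this example 11 of 50 sampled points (22%) are flagged, so the batch would go back to the original annotator, whereas 10 of 50 (exactly 20%) would still pass.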

Figure 2: Example of keypoint annotations on a video frame

Quality Assurance

To ensure annotation quality, we implemented:

- Multi-stage review process

- Random sampling for quality checks

- Continuous feedback and retraining

- Inter-annotator agreement metrics